Poisson distribution

From Wikipedia, the free encyclopedia

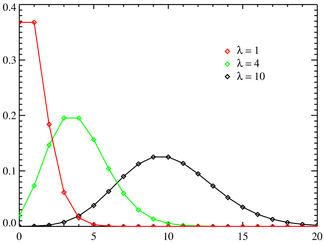

Probability mass function. The horizontal axis is the index k. The function is non-zero only at integer values of k. The connecting lines are only guides for the eye and do not indicate continuity.

Cumulative distribution function. The horizontal axis is the index k.

| Property | Value |
|---|---|
| Parameters | $\lambda > 0$ |
| Support | $k \in \{0, 1, 2, \ldots\}$ |
| Probability mass function (pmf) | $\frac{e^{-\lambda}\lambda^k}{k!}$ |
| Cumulative distribution function (cdf) | $\frac{\Gamma(\lfloor k+1 \rfloor, \lambda)}{\lfloor k \rfloor!}$ for $k \ge 0$ (where Γ(x,y) is the incomplete gamma function) |
| Mean | $\lambda$ |
| Median | usually about $\lfloor \lambda + 1/3 - 0.02/\lambda \rfloor$ |
| Mode | $\lfloor \lambda \rfloor$ (and λ − 1 if λ is an integer) |
| Variance | $\lambda$ |
| Skewness | $\lambda^{-1/2}$ |
| Excess kurtosis | $\lambda^{-1}$ |
| Entropy | $\lambda[1 - \ln(\lambda)] + e^{-\lambda}\sum_{k=0}^\infty \frac{\lambda^k \ln(k!)}{k!}$ |
| Moment-generating function (mgf) | $\exp(\lambda(e^t - 1))$ |
| Characteristic function | $\exp(\lambda(e^{it} - 1))$ |

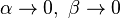

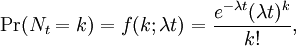

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a number of events occurring in a fixed period of time if these events occur with a known average rate, and are independent of the time since the last event.

The distribution was discovered by Siméon-Denis Poisson (1781–1840) and published, together with his probability theory, in 1837 in his work Recherches sur la probabilité des jugements en matière criminelle et en matière civile ("Research on the Probability of Judgments in Criminal and Civil Matters"). The work focused on certain random variables N that count, among other things, a number of discrete occurrences (sometimes called "arrivals") that take place during a time interval of given length. The probability that there are exactly x occurrences (x being a non-negative integer, x = 0, 1, 2, ...) while the expected number of occurrences is λ equals

$$f(x; \lambda) = \frac{\lambda^x e^{-\lambda}}{x!},$$

where

- e is the base of the natural logarithm (e = 2.71828...)

- x is the number of occurrences of an event, the probability of which is given by the function f

- x! is the factorial of x

- λ is a positive real number, equal to the expected number of occurrences during the given interval. For instance, if the events occur on average every 4 minutes, and you are interested in the number of events occurring in a 10-minute interval, you would use as a model a Poisson distribution with λ = 10/4 = 2.5.

As a function of x, this is the probability mass function. The Poisson distribution can be derived as a limiting case of the binomial distribution.
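The mass function is straightforward to evaluate numerically. The following is a minimal sketch, assuming the form λ^x e^(−λ) / x! and using the 10-minute example with λ = 2.5; the function name `poisson_pmf` is chosen here for illustration and is not part of any particular library.

```python
import math


def poisson_pmf(x: int, lam: float) -> float:
    """Probability of exactly x occurrences when the expected number is lam (> 0)."""
    if x < 0:
        return 0.0
    # Work in log space so that large x or lam do not overflow the factorial.
    return math.exp(x * math.log(lam) - lam - math.lgamma(x + 1))


# Events occur every 4 minutes on average; we watch a 10-minute interval.
lam = 10 / 4
for x in range(6):
    print(x, round(poisson_pmf(x, lam), 4))
```

Evaluating in log space is a design choice of this sketch: it avoids computing x! directly, which grows very quickly.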

The Poisson distribution is sometimes called a Poissonian, analogous to the term Gaussian for a Gauss or normal distribution.


Poisson noise and characterizing small occurrences

The parameter λ is not only the mean number of occurrences $\langle k \rangle$, but also its variance $\sigma_k^2 = \langle k^2 \rangle - \langle k \rangle^2$ (see Table). Thus, the number of observed occurrences fluctuates about its mean λ with a standard deviation $\sigma_k = \sqrt{\lambda}$. These fluctuations are denoted as Poisson noise or (particularly in electronics) as shot noise.

The correlation of the mean and standard deviation in counting independent, discrete occurrences is useful scientifically. By monitoring how the fluctuations vary with the mean signal, one can estimate the contribution of a single occurrence, even if that contribution is too small to be detected directly. For example, the charge e on an electron can be estimated by correlating the magnitude of an electric current with its shot noise. If N electrons pass a point in a given time t on the average, the mean current is I = eN / t; since the current fluctuations should be of the order $\sigma_I = e\sqrt{N}/t$ (i.e. the standard deviation of the Poisson process), the charge e can be estimated from the ratio $t\sigma_I^2 / I$. An everyday example is the graininess that appears as photographs are enlarged; the graininess is due to Poisson fluctuations in the number of reduced silver grains, not to the individual grains themselves. By correlating the graininess with the degree of enlargement, one can estimate the contribution of an individual grain (which is otherwise too small to be seen unaided). Many other molecular applications of Poisson noise have been developed, e.g., estimating the number density of receptor molecules in a cell membrane.
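The idea of recovering a single occurrence's contribution from the noise can be illustrated with a small simulation. This is only a sketch under stated assumptions: the signal is taken to be a hypothetical per-event contribution `q_true` times a Poisson count with hypothetical mean `lam`, so its variance-to-mean ratio should be close to `q_true`. The helper names are invented for illustration, and the sampler is the simple multiplication method, which is adequate for modest λ.

```python
import math
import random
import statistics


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) count by multiplying uniforms (suitable for modest lam)."""
    threshold = math.exp(-lam)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product < threshold:
            return count
        count += 1


def estimate_unit_contribution(signals):
    """Variance-to-mean ratio; equals q when signal = q * (Poisson count)."""
    return statistics.pvariance(signals) / statistics.fmean(signals)


rng = random.Random(0)   # fixed seed so the run is repeatable
q_true = 0.25            # hypothetical contribution of one occurrence
lam = 40.0               # hypothetical mean count per measurement
signals = [q_true * sample_poisson(lam, rng) for _ in range(20000)]
q_est = estimate_unit_contribution(signals)
print(q_est)
```

The estimate works because the variance of the signal is q²λ while its mean is qλ, so λ cancels in the ratio.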

Poisson processes

Sometimes λ is taken to be the rate, i.e., the average number of occurrences per unit time. In that case, if $N_t$ is the number of occurrences before time t then, for k = 0, 1, 2, ..., we have

$$\Pr(N_t = k) = \frac{e^{-\lambda t}(\lambda t)^k}{k!},$$

and the waiting time T until the first occurrence is a continuous random variable with an exponential distribution (with parameter λ). This probability distribution may be deduced from the fact that

$$\Pr(T > t) = \Pr(N_t = 0) = e^{-\lambda t}.$$

When time becomes involved, then we have a 1-dimensional Poisson process, which involves both the discrete Poisson-distributed random variables that count the number of arrivals in each time interval, and the continuous Erlang-distributed waiting times. There are also Poisson processes of dimension higher than 1.
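The link between exponential waiting times and Poisson counts can be checked by simulation. The sketch below assumes independent Exponential(λ) gaps between arrivals and counts how many arrivals land in [0, t]; the values of `rate`, `t` and `runs` are arbitrary illustrative choices, and `count_arrivals` is a name invented here.

```python
import math
import random


def count_arrivals(rate, t, rng):
    """Count arrivals in [0, t] when gaps between arrivals are Exponential(rate)."""
    elapsed = rng.expovariate(rate)   # waiting time until the first arrival
    count = 0
    while elapsed <= t:
        count += 1
        elapsed += rng.expovariate(rate)
    return count


rng = random.Random(1)
rate, t, runs = 2.0, 1.5, 50000
counts = [count_arrivals(rate, t, rng) for _ in range(runs)]

# Fraction of runs with no arrival, compared with Pr(T > t) = exp(-rate * t).
empty_fraction = counts.count(0) / runs
mean_count = sum(counts) / runs
print(empty_fraction, math.exp(-rate * t))
print(mean_count, rate * t)
```

If the model holds, the empirical fraction of empty intervals should be close to e^(−λt) and the average count close to λt.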

Related distributions

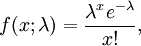

- If $X_1 \sim \mathrm{Poisson}(\lambda_1)$ and $X_2 \sim \mathrm{Poisson}(\lambda_2)$ are independent, then the difference Y = X1 − X2 follows a Skellam distribution.

- If $X_1 \sim \mathrm{Poisson}(\lambda_1)$ and $X_2 \sim \mathrm{Poisson}(\lambda_2)$ are independent, and Y = X1 + X2, then the distribution of X1 conditional on Y = y is a binomial. Specifically, $X_1 \mid (Y = y) \sim \mathrm{Binom}\left(y, \frac{\lambda_1}{\lambda_1 + \lambda_2}\right)$. More generally, if X1, X2,..., Xn are independent Poisson random variables with parameters λ1, λ2,..., λn then $X_i \mid \sum_{j=1}^n X_j \sim \mathrm{Binom}\left(\sum_{j=1}^n X_j, \frac{\lambda_i}{\sum_{j=1}^n \lambda_j}\right)$.

- The Poisson distribution can be derived as a limiting case of the binomial distribution as the number of trials goes to infinity and the expected number of successes remains fixed. Therefore it can be used as an approximation of the binomial distribution if n is sufficiently large and p is sufficiently small. There is a rule of thumb stating that the Poisson distribution is a good approximation of the binomial distribution if n is at least 20 and p is smaller than or equal to 0.05. According to this rule the approximation is excellent if n ≥ 100 and np ≤ 10. [1]

- For sufficiently large values of λ (say λ > 1000), the normal distribution with mean λ and variance λ is an excellent approximation to the Poisson distribution. If λ is greater than about 10, then the normal distribution is a good approximation if an appropriate continuity correction is performed, i.e., P(X ≤ x), where (lower-case) x is a non-negative integer, is replaced by P(X ≤ x + 0.5).
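The effect of the continuity correction can be seen in a short comparison. This sketch computes the exact Poisson cdf by summation and the normal cdf via the error function; λ = 20 and x = 18 are arbitrary illustrative values, and the function names are invented here.

```python
import math


def poisson_cdf(x, lam):
    """Exact P(X <= x) for X ~ Poisson(lam), summing the mass function."""
    term = math.exp(-lam)   # probability of zero occurrences
    total = term
    for k in range(1, x + 1):
        term *= lam / k     # pmf(k) = pmf(k - 1) * lam / k
        total += term
    return total


def normal_cdf(z, mean, variance):
    """Cumulative distribution function of a normal with the given mean and variance."""
    return 0.5 * (1.0 + math.erf((z - mean) / math.sqrt(2.0 * variance)))


lam, x = 20.0, 18
exact = poisson_cdf(x, lam)
plain = normal_cdf(x, lam, lam)            # no continuity correction
corrected = normal_cdf(x + 0.5, lam, lam)  # with continuity correction
print(exact, plain, corrected)
```

For these values the corrected approximation should land noticeably closer to the exact probability than the uncorrected one.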

Occurrence

The Poisson distribution arises in connection with Poisson processes. It applies to various phenomena of discrete nature (that is, those that may happen 0, 1, 2, 3, ... times during a given period of time or in a given area) whenever the probability of the phenomenon happening is constant in time or space. Examples of events that have been modelled as Poisson distributions include:

- The number of cars that pass through a certain point on a road (sufficiently distant from traffic lights) during a given period of time.

- The number of spelling mistakes one makes while typing a single page.

- The number of phone calls at a call center per minute.

- The number of times a web server is accessed per minute.

- The number of roadkill (animals killed) found per unit length of road.

- The number of mutations in a given stretch of DNA after a certain amount of radiation.

- The number of unstable nuclei that decayed within a given period of time in a piece of radioactive substance. The radioactivity of the substance will weaken with time, so the total time interval used in the model should be significantly less than the mean lifetime of the substance.

- The number of pine trees per unit area of mixed forest.

- The number of stars in a given volume of space.

- The number of soldiers killed by horse-kicks each year in each corps in the Prussian cavalry. This example was made famous by a book of Ladislaus Josephovich Bortkiewicz (1868–1931).

- The distribution of visual receptor cells in the retina of the human eye.

- The number of V2 rocket attacks per area in England, according to the fictionalized account in Thomas Pynchon's Gravity's Rainbow.

- The number of light bulbs that burn out in a certain amount of time.

- The number of viruses that can infect a cell in cell culture.

- The number of hematopoietic stem cells in a sample of unfractionated bone marrow cells.

- The inventivity of an inventor over their career.

- The number of particles that "scatter" off of a target in a nuclear or high energy physics experiment.

How does this distribution arise? The law of rare events

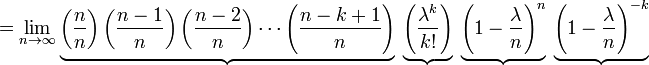

In several of the above examples—for example, the number of mutations in a given sequence of DNA—the events being counted are actually the outcomes of discrete trials, and would more precisely be modelled using the binomial distribution. However, the binomial distribution with parameters n and λ/n, i.e., the probability distribution of the number of successes in n trials, with probability λ/n of success on each trial, approaches the Poisson distribution with expected value λ as n approaches infinity. This limit is sometimes known as the law of rare events, although this name may be misleading because the events in a Poisson process need not be rare (the number of telephone calls to a busy switchboard in one hour follows a Poisson distribution, but these events would not be considered rare). It provides a means by which to approximate random variables using the Poisson distribution rather than the more-cumbersome binomial distribution.

Here are the details. First, recall from calculus that

$$\lim_{n\to\infty}\left(1 - \frac{\lambda}{n}\right)^n = e^{-\lambda}.$$

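Let p = λ/n. Then we have

$$\lim_{n\to\infty} \Pr(X = k) = \lim_{n\to\infty} \binom{n}{k} p^k (1-p)^{n-k} = \lim_{n\to\infty} \frac{n!}{(n-k)!\,k!} \left(\frac{\lambda}{n}\right)^k \left(1 - \frac{\lambda}{n}\right)^{n-k}$$

$$= \lim_{n\to\infty} \underbrace{\left(\frac{n}{n}\right)\left(\frac{n-1}{n}\right)\cdots\left(\frac{n-k+1}{n}\right)}_{\to 1} \underbrace{\left(\frac{\lambda^k}{k!}\right)}_{\text{constant}} \underbrace{\left(1 - \frac{\lambda}{n}\right)^n}_{\to e^{-\lambda}} \underbrace{\left(1 - \frac{\lambda}{n}\right)^{-k}}_{\to 1}.$$

As n approaches ∞, the expression over the first underbrace approaches 1; the second remains constant since "n" does not appear in it at all; the third approaches $e^{-\lambda}$; and the fourth expression approaches 1.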

Consequently the limit is

$$\frac{\lambda^k e^{-\lambda}}{k!}.$$

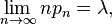

More generally, whenever a sequence of binomial random variables with parameters n and $p_n$ is such that

$$\lim_{n\to\infty} n p_n = \lambda,$$

the sequence converges in distribution to a Poisson random variable with mean λ (see, e.g., law of rare events).
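The convergence can also be observed numerically. The sketch below, with an arbitrary illustrative λ = 3, measures the largest pointwise gap between the Binomial(n, λ/n) and Poisson(λ) mass functions over k = 0, ..., 20 for growing n; the function names and the cut-off `k_max` are choices made for this example.

```python
import math


def binomial_pmf(k, n, p):
    """P(exactly k successes in n trials with success probability p)."""
    if k > n:
        return 0.0
    return math.comb(n, k) * p ** k * (1.0 - p) ** (n - k)


def poisson_pmf(k, lam):
    """P(exactly k occurrences) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def max_abs_gap(n, lam, k_max=20):
    """Largest difference between the two mass functions for k = 0..k_max."""
    p = lam / n
    return max(
        abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam))
        for k in range(k_max + 1)
    )


lam = 3.0
for n in (10, 100, 1000, 10000):
    print(n, max_abs_gap(n, lam))
```

The printed gaps should shrink as n grows, consistent with the limit derived above.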

Properties

- The expected value of a Poisson-distributed random variable is equal to λ and so is its variance. The higher moments of the Poisson distribution are Touchard polynomials in λ, whose coefficients have a combinatorial meaning. In fact when the expected value of the Poisson distribution is 1, then Dobinski's formula says that the nth moment equals the number of partitions of a set of size n.

- The mode of a Poisson-distributed random variable with non-integer λ is equal to $\lfloor \lambda \rfloor$, which is the largest integer less than or equal to λ. This is also written as floor(λ). When λ is a positive integer, the modes are λ and λ − 1.

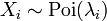

- Sums of Poisson-distributed random variables: if $X_i \sim \mathrm{Poi}(\lambda_i)$, i = 1, ..., N, follow a Poisson distribution with parameter $\lambda_i$ and the $X_i$ are independent, then $Y = \sum_{i=1}^N X_i \sim \mathrm{Poi}\left(\sum_{i=1}^N \lambda_i\right)$ also follows a Poisson distribution whose parameter is the sum of the component parameters.

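- The moment-generating function of the Poisson distribution with expected value λ is

$$\operatorname{E}\left(e^{tX}\right) = \sum_{k=0}^\infty e^{tk} \frac{\lambda^k e^{-\lambda}}{k!} = e^{\lambda(e^t - 1)}.$$

- All of the cumulants of the Poisson distribution are equal to the expected value λ. The nth factorial moment of the Poisson distribution is $\lambda^n$.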

- The Poisson distributions are infinitely divisible probability distributions.

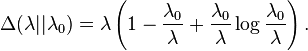

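- The directed Kullback-Leibler divergence between Poi(λ0) and Poi(λ) is given by

$$D_{\mathrm{KL}}\big(\mathrm{Poi}(\lambda_0) \,\|\, \mathrm{Poi}(\lambda)\big) = \lambda - \lambda_0 + \lambda_0 \ln\frac{\lambda_0}{\lambda}.$$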
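A closed form for the divergence can be sanity-checked against a direct summation over the mass functions. The sketch below assumes the divergence is taken from Poi(λ0) to Poi(λ), with closed form λ − λ0 + λ0 ln(λ0/λ); the parameter values 4 and 6.5 and the truncation point `k_max` are arbitrary choices for illustration.

```python
import math


def poisson_logpmf(k, lam):
    """Natural log of the Poisson(lam) mass function at k."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)


def kl_closed_form(lam0, lam):
    """Assumed closed form for the divergence from Poi(lam0) to Poi(lam)."""
    return lam - lam0 + lam0 * math.log(lam0 / lam)


def kl_by_summation(lam0, lam, k_max=200):
    """Truncated sum of p0(k) * log(p0(k) / p(k)) over k = 0..k_max."""
    total = 0.0
    for k in range(k_max + 1):
        log_p0 = poisson_logpmf(k, lam0)
        total += math.exp(log_p0) * (log_p0 - poisson_logpmf(k, lam))
    return total


print(kl_closed_form(4.0, 6.5), kl_by_summation(4.0, 6.5))
```

The two printed values should agree to many decimal places, since the tail beyond `k_max` is negligible for these parameters.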

Generating Poisson-distributed random variables

A simple way to generate random Poisson-distributed numbers is given by Knuth, see References below.

    algorithm poisson random number (Knuth):
        init:
            Let L ← e^(−λ), k ← 0 and p ← 1.
        do:
            k ← k + 1.
            Generate uniform random number u and let p ← p × u.
        while p ≥ L
        return k − 1.

While simple, the complexity is linear in λ. There are many other algorithms to overcome this. Some are given in Ahrens & Dieter, see References below.
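The pseudocode translates almost line for line into Python. This is a minimal sketch rather than a production sampler: the function name `knuth_poisson`, the optional `rng` argument and the upper limit on λ are choices made here. The limit is an added safeguard, since e^(−λ) underflows to zero in double precision for large λ and the loop would then never terminate.

```python
import math
import random


def knuth_poisson(lam, rng=random):
    """Draw one Poisson(lam) variate by multiplying uniform random numbers."""
    if not 0.0 <= lam <= 700.0:
        # Guard added for this sketch: exp(-lam) underflows for very large lam.
        raise ValueError("lam must lie in [0, 700] for this simple method")
    limit = math.exp(-lam)
    k = 0
    p = 1.0
    while True:
        k += 1
        p *= rng.random()   # uniform on [0, 1)
        if p < limit:       # the pseudocode keeps looping while p >= L
            return k - 1


rng = random.Random(42)     # fixed seed so the run is repeatable
draws = [knuth_poisson(3.0, rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)
sample_var = sum((d - sample_mean) ** 2 for d in draws) / len(draws)
print(sample_mean, sample_var)
```

Both the sample mean and the sample variance should come out close to λ = 3, and each draw uses about λ + 1 uniform numbers on average, which is the linear cost mentioned above.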

Parameter estimation

Maximum likelihood

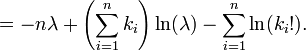

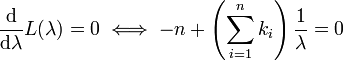

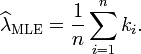

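Given a sample of n measured values $k_i$ we wish to estimate the value of the parameter λ of the Poisson population from which the sample was drawn. To calculate the maximum likelihood value, we form the log-likelihood function

$$L(\lambda) = \ln \prod_{i=1}^n f(k_i \mid \lambda) = \sum_{i=1}^n \ln\left(\frac{e^{-\lambda}\lambda^{k_i}}{k_i!}\right) = -n\lambda + \left(\sum_{i=1}^n k_i\right)\ln(\lambda) - \sum_{i=1}^n \ln(k_i!).$$

Take the derivative of L with respect to λ and equate it to zero:

$$\frac{\mathrm{d}}{\mathrm{d}\lambda} L(\lambda) = 0 \iff -n + \left(\sum_{i=1}^n k_i\right)\frac{1}{\lambda} = 0.$$

Solving for λ yields the maximum-likelihood estimate of λ:

$$\widehat{\lambda}_{\mathrm{MLE}} = \frac{1}{n}\sum_{i=1}^n k_i.$$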

Since each observation has expectation λ so does this sample mean. Therefore it is an unbiased estimator of λ. It is also an efficient estimator, i.e. its estimation variance achieves the Cramér-Rao lower bound (CRLB).
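In code, the maximum-likelihood estimate is simply the sample mean. The sketch below uses a small made-up sample (the counts are hypothetical, not data from any study) and checks that the log-likelihood is lower on either side of the estimate; the function names are chosen here for illustration.

```python
import math


def log_likelihood(lam, ks):
    """Poisson log-likelihood of the counts ks at rate lam (> 0)."""
    return sum(k * math.log(lam) - lam - math.lgamma(k + 1) for k in ks)


def poisson_mle(ks):
    """Maximum-likelihood estimate of lam: the sample mean of the counts."""
    return sum(ks) / len(ks)


sample = [2, 3, 0, 4, 1, 2, 5, 3]   # hypothetical observed counts
lam_hat = poisson_mle(sample)
print(lam_hat)
for lam in (lam_hat - 0.1, lam_hat, lam_hat + 0.1):
    print(lam, log_likelihood(lam, sample))
```

Because the log-likelihood is concave in λ, comparing a point on each side of the estimate is enough to suggest that the sample mean sits at the maximum.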

Bayesian inference

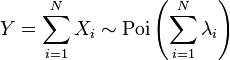

In Bayesian inference, the conjugate prior for the rate parameter λ of the Poisson distribution is the Gamma distribution. Let

$$\lambda \sim \mathrm{Gamma}(\alpha, \beta)$$

denote that λ is distributed according to the Gamma density g parameterized in terms of a shape parameter α and an inverse scale parameter β:

$$g(\lambda \mid \alpha, \beta) = \frac{\beta^\alpha}{\Gamma(\alpha)} \lambda^{\alpha - 1} e^{-\beta\lambda} \quad \text{for } \lambda > 0.$$

Then, given the same sample of n measured values $k_i$ as before, and a prior of Gamma(α, β), the posterior distribution is

$$\lambda \sim \mathrm{Gamma}\left(\alpha + \sum_{i=1}^n k_i,\ \beta + n\right).$$

The posterior mean E[λ] approaches the maximum likelihood estimate $\widehat{\lambda}_{\mathrm{MLE}}$ in the limit as $\alpha \to 0,\ \beta \to 0$.

The posterior predictive distribution of additional data is a Gamma-Poisson (i.e. negative binomial) distribution.
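The conjugate update amounts to two additions. The following sketch assumes the standard update Gamma(α + Σ k_i, β + n) with β as an inverse scale (rate) parameter; the sample and the prior values are hypothetical, and the function names are invented for illustration.

```python
def gamma_posterior(alpha, beta, ks):
    """Posterior (shape, rate) after observing Poisson counts ks under a Gamma(alpha, beta) prior."""
    return alpha + sum(ks), beta + len(ks)


def gamma_mean(alpha, beta):
    """Mean of a Gamma distribution with shape alpha and rate (inverse scale) beta."""
    return alpha / beta


sample = [2, 3, 0, 4, 1, 2, 5, 3]    # hypothetical observed counts
mle = sum(sample) / len(sample)

# A moderately informative prior, then priors that shrink towards alpha, beta -> 0.
for alpha, beta in ((2.0, 1.0), (0.1, 0.1), (1e-6, 1e-6)):
    post_alpha, post_beta = gamma_posterior(alpha, beta, sample)
    print(alpha, beta, gamma_mean(post_alpha, post_beta), mle)
```

As the prior parameters shrink, the printed posterior mean should move towards the sample mean, matching the limiting behaviour described above.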

The "law of small numbers"

The word law is sometimes used as a synonym of probability distribution, and convergence in law means convergence in distribution. Accordingly, the Poisson distribution is sometimes called the law of small numbers because it is the probability distribution of the number of occurrences of an event that happens rarely but has very many opportunities to happen. The Law of Small Numbers is a book by Ladislaus Bortkiewicz about the Poisson distribution, published in 1898. Some historians of mathematics have argued that the Poisson distribution should have been called the Bortkiewicz distribution.[citation needed]

See also

- Compound Poisson distribution

- Poisson process

- Poisson regression

- Queueing theory

- Erlang distribution, which describes the waiting time until n events have occurred. For temporally distributed events, the Poisson distribution is the probability distribution of the number of events that would occur within a preset time, while the Erlang distribution is the probability distribution of the amount of time until the nth event.

- Skellam distribution, the distribution of the difference of two Poisson variates, not necessarily from the same parent distribution.

- Incomplete gamma function used to calculate the CDF.

- Dobinski's formula (on combinatorial interpretation of the moments of the Poisson distribution)

- Schwarz formula

References

- [1] NIST/SEMATECH, '6.3.3.1. Counts Control Charts', e-Handbook of Statistical Methods, <http://www.itl.nist.gov/div898/handbook/pmc/section3/pmc331.htm> [accessed 25 October 2006]

- Donald E. Knuth (1969). Seminumerical Algorithms, The Art of Computer Programming, Volume 2. Addison Wesley.

- Joachim H. Ahrens, Ulrich Dieter (1974). "Computer Methods for Sampling from Gamma, Beta, Poisson and Binomial Distributions". Computing 12 (3): 223–246. DOI:10.1007/BF02293108.

- Joachim H. Ahrens, Ulrich Dieter (1982). "Computer Generation of Poisson Deviates". ACM Transactions on Mathematical Software 8 (2): 163–179. DOI:10.1145/355993.355997.

External links

- Law of rare events on PlanetMath

- Queueing Theory Basics

- M/M/1 Queueing System

- Engineering Statistics Handbook: Poisson Distribution

- xkcd webcomic involving a Poisson distribution

- Poisson Distribution at QWiki

- Poisson Derivation 1: Continuous limit of a Binomial distribution

- Poisson Derivation 2: Generating function approach

- Poisson Derivation 3: Summation of the waiting-time distribution
